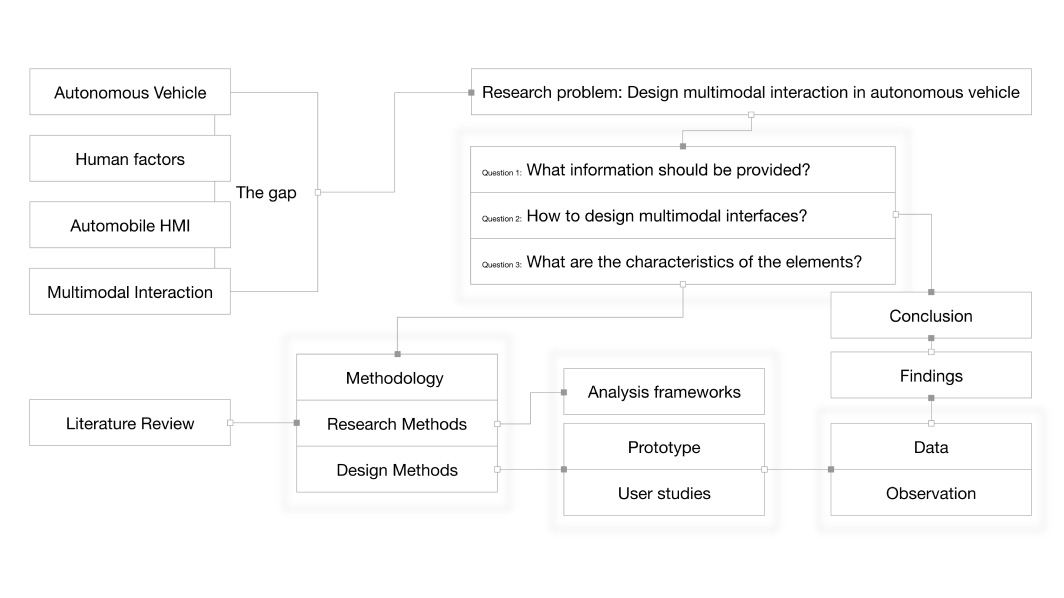

In 2017, I embarked on a research project under the supervision of Dr. Luke Hespanhol, focusing on the usability challenges facing the future automotive industry. This three-month project explored human-vehicle interaction, specifically multimodal interaction, in autonomous vehicles. The goal was to determine the most effective methods of providing and presenting information in Level 3 autonomous systems, ensuring optimal driver performance and situational awareness.

Within this project, two multimodal interfaces were developed for a partially autonomous system, aligning with the taxonomy and definition of autonomous vehicles outlined by SAE International. A user test was carefully designed and conducted to compare the drivers' situation awareness, performance, and acceptance of these two interfaces. Through rigorous analysis of the test results, this research essay draws conclusions regarding the design of multimodal human-machine interfaces (HMIs) for autonomous vehicles.

By considering the specific requirements of multimodal HMI, this research enhances the potential for improved user experience, driver performance, and overall acceptance of autonomous vehicles.

Technology and automotive companies argue that autonomous technology is the ultimate solution to safety issues, since autonomous driving systems perform much better than human drivers do (Waymo, 2016).

Technologies such as touchscreens have been widely adopted in the automotive industry. On one hand, these displays provide more information that can benefit driving safety. On the other hand, the growing number of in-vehicle interfaces adds considerably to drivers' mental workload (Beruscha, Brosi, Krautter, & Altmüller, 2016), not to mention the undeniably widespread use of mobile phones while driving.

Multimodal interaction systems have the potential to increase task efficiency (Dumas, Lalanne, & Oviatt, 2009) and may enhance both the speed and quality of human information processing (Van Wassenhove, Grant, & Poeppel, 2005).

Human-machine interaction depends mainly on the type of cooperation between human and machine, which is described by the level of automation (LOA) (Parasuraman, Sheridan, & Wickens, 2000). High levels of automation that take over decision making and control may raise concerns about safety and trust (Parasuraman & Riley, 1997).

According to Oviatt (1999), designing a multimodal interaction system requires understanding the properties and characteristics of the different modes, how users prefer to interact multimodally, and the advantages of multimodal systems over unimodal ones.

Human factors are essential in systems where humans and automation cooperate (Salvendy, 2012), and poorly designed interactions can result in accidents (Parasuraman & Riley, 1997). Applying conventional HMI designs might raise safety issues (Klauer et al., 2006) and harm the user experience.

This essay employs the research through design methodology, as proposed by Zimmerman, Forlizzi, and Evenson (2007). Following the identification of research gaps and the formulation of a research problem, the objective is to generate research artifacts by designing multiple interface concepts. User tests are then conducted on these concepts, and the results are analyzed to derive insights.

By applying the research through design approach, this essay seeks to go beyond traditional research methods and instead focuses on actively designing and prototyping interface concepts. Through the user tests and subsequent analysis, valuable data and observations are gathered to inform the research findings.

The research method employed in this study involves a simulation setup comprising several components. These include a large screen displaying the driving scenario, two smaller screens showcasing the instrument panel and dashboard interfaces, a laptop controlled by the researcher to manage scenarios and display information, and a steering wheel and pedal simulation setup.

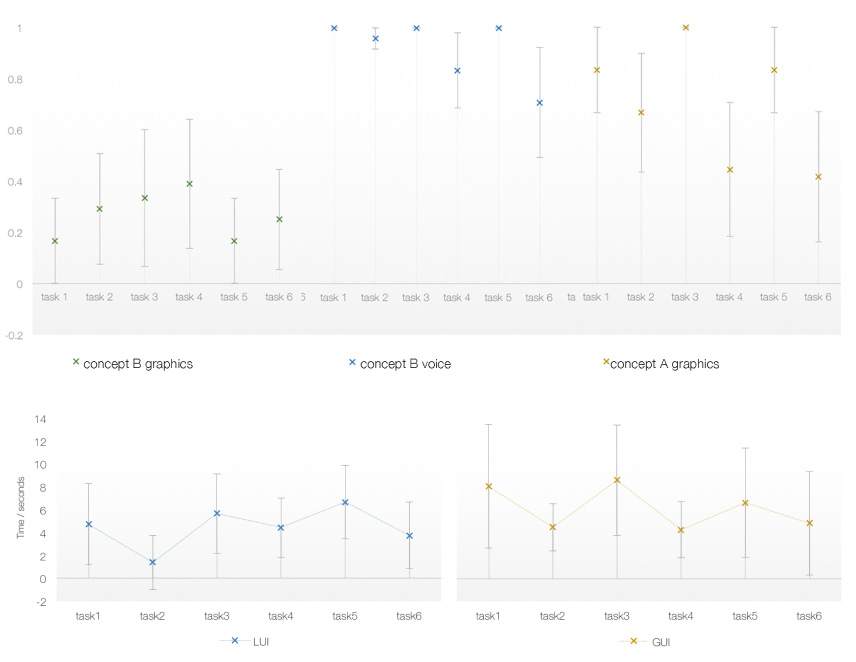

To compare the effectiveness of different interface designs, two concepts were tested: Concept A (a graphic interface) and Concept B (with voice interaction). The evaluation consisted of six tasks that participants performed using each interface concept.

User testing was conducted in a driving simulation environment with real-world scenario videos, two touchscreens, a steering wheel, and pedals. Participants performed six tasks using both interface concepts in alternating order. The researcher administered the SAGAT test to assess situational awareness and asked questions during the test as a distraction. Reaction times were measured using a Max MSP patch. A post-test questionnaire gathered participant feedback. This comprehensive user testing approach provided valuable insights into performance, situational awareness, and user impressions.

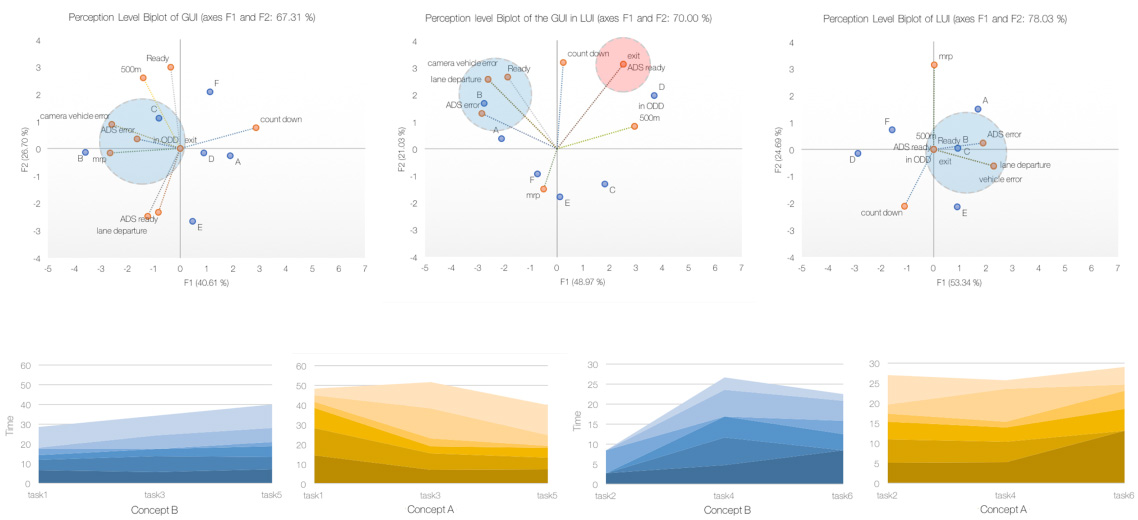

Data was collected across three key aspects: situational awareness (SA), user performance, and concept acceptance. By collecting data on these three aspects, a comprehensive understanding of the effectiveness and user reception of the interface concepts was achieved.

For situational awareness, scores were calculated for each element of both interface concepts by comparing participant responses with the actual situation presented in the simulation.
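As a rough illustration of this scoring step, the sketch below compares a participant's answers with the ground truth at a simulation freeze and returns the fraction answered correctly. It is a minimal sketch, not the scoring procedure actually used in the study: the function name `sa_score`, the query names, and the exact-match rule are all assumptions made for illustration.

```python
from typing import Dict


def sa_score(responses: Dict[str, str], ground_truth: Dict[str, str]) -> float:
    """Return the fraction of SA queries answered correctly.

    Assumption: a response counts as correct when it matches the ground
    truth after trimming whitespace and ignoring case. Unanswered queries
    count as incorrect.
    """
    if not ground_truth:
        raise ValueError("ground_truth must contain at least one query")
    correct = sum(
        1
        for query, truth in ground_truth.items()
        if responses.get(query, "").strip().lower() == truth.strip().lower()
    )
    return correct / len(ground_truth)


# Hypothetical queries and answers, for illustration only.
truth_at_freeze = {"vehicle_ahead": "truck", "current_mode": "autonomous"}
participant_answers = {"vehicle_ahead": "Truck", "current_mode": "manual"}
score = sa_score(participant_answers, truth_at_freeze)
```

Computing one score per element and per concept in this way would give the per-element comparison between Concept A and Concept B.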

User performance data, such as reaction times, was recorded by the prototype during the testing sessions. This data was collected and analyzed at a later stage to provide insights into participants' response times.

Concept acceptance data was gathered through post-test questionnaires, which allowed participants to express their perceptions and preferences regarding the two interface concepts.

T-tests, Principal Component Analysis, and other tools were used to analyse the results. The conclusions drawn are intended to inform multimodal HMI design.
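Because every participant used both concepts, a paired comparison is one natural way to run such a t-test. The sketch below computes the paired t statistic with the Python standard library only. The choice of a paired test is an assumption, since the exact variant used is not stated here, and the function name `paired_t_statistic` is hypothetical.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t_statistic(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Return (t, degrees of freedom) for a paired t-test on a and b.

    a[i] and b[i] must come from the same participant, for example the
    reaction time with Concept A and with Concept B.
    """
    if len(a) != len(b):
        raise ValueError("both samples must have the same length")
    if len(a) < 2:
        raise ValueError("at least two paired observations are required")
    diffs = [x - y for x, y in zip(a, b)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("t is undefined when all differences are identical")
    t = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return t, len(diffs) - 1
```

The resulting t value would then be compared against the t distribution with the returned degrees of freedom to obtain a p-value, for instance with a statistics package.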

A multimodal interface doesn’t guarantee that users will interact multimodally (Parasuraman & Riley, 1997). Graphic information takes more time to comprehend than speech. However, once participants learn the pattern, it’s as effective as speech. Speech is easier to comprehend and more dominant, and there is evidence that speech is more efficient and accountable in certain scenarios.